Everywhere we look, statistical models and neural networks have blossomed. Seemingly overnight, LLMs and other AI technologies have grown from fascinating curiosities to being embedded in everything, everywhere. Chatbots now handle customer service requests and teach foreign languages while large language models write dissertations for students and code for professionals.

Software companies are claiming, and seem to be realizing, gains in programmer productivity thanks to code generation by LLM-backed AIs. Language learning tools and automated translation have been revolutionized in just a few short years, and it is not hard to imagine that in the near future, advanced artificial intelligence will be as commodified as the once-world-shaking smartphone.

AI tools provide answers that sound good and are easy for humans to consume, but struggle with a key challenge: knowing the truth. This flaw is a serious roadblock to using AI in security-critical workflows.

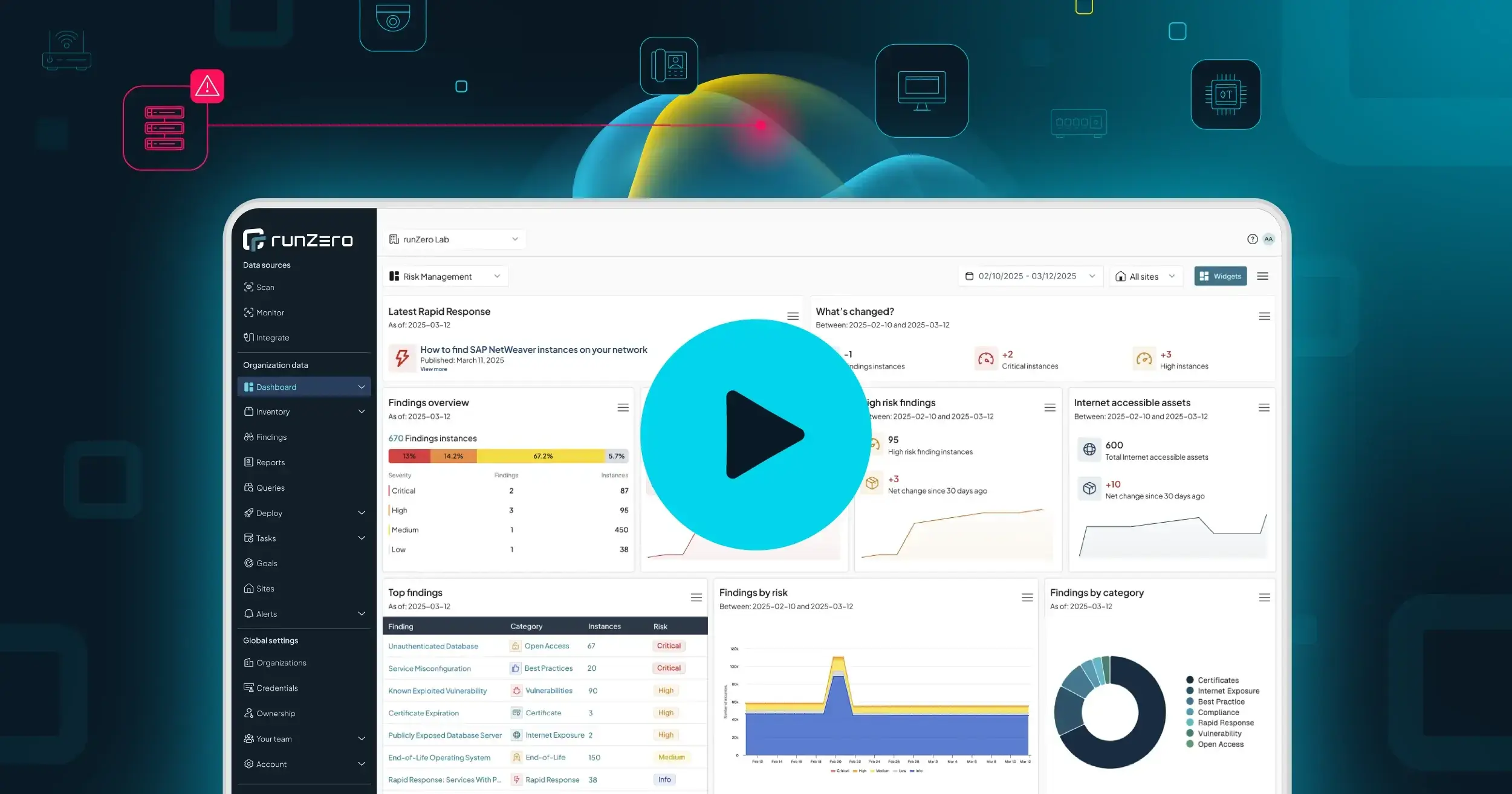

Modern AI is undoubtedly a fascinating and powerful set of technologies, but these tools are ill-suited to CAASM (cyber asset attack surface management) and vulnerability discovery efforts. runZero believes that current-generation AI is not just unhelpful for most security efforts, but can be actively harmful.

LLM verification challenges for CAASM

LLMs have proven excellent at prediction and generation, but struggle to provide useful outcomes when the workload requires high levels of precision.

In the case of content and code generation, LLMs do well because the user can quickly verify that the output matches the intent. Does the sentence make sense? Does the code compile? These are quick tests that the user can apply to determine whether the LLM provided an accurate response.

LLM-generated data presents two problems for cyber asset attack surface management:

1. There is no guarantee that the claims made by the tool are accurate, or even that the specific assets or vulnerabilities exist. Careful prompt engineering might help, but it might not.

2. The inference mechanisms are black boxes. There is little way to know how the detected devices relate to the provided evidence, or what was skimmed over or omitted by the inference process.

In short, without an efficient way to verify the output from an LLM, it is difficult to rely on these systems for discovery automation at scale.

Slightly wrong is rarely right

LLMs struggle with another aspect of information security: the sheer scale of data. Even an AI tool that is 99% accurate at detecting vulnerabilities and classifying assets may result in worse outcomes than not using the tool at all. A one percent gap may seem small, but modern organizations manage asset and vulnerability records in the millions and even billions.
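To put the one percent gap in concrete terms, here is a minimal sketch of the arithmetic. The record counts are illustrative round numbers rather than measurements, and `expected_errors` is a hypothetical helper name.

```python
def expected_errors(record_count: int, accuracy: float) -> int:
    """Expected number of wrong answers for a tool with the given accuracy."""
    return round(record_count * (1.0 - accuracy))


# Illustrative dataset sizes (assumed, not taken from any real environment).
for records in (1_000_000, 100_000_000, 1_000_000_000):
    wrong = expected_errors(records, accuracy=0.99)
    print(f"{records:>13,} records -> {wrong:>10,} wrong answers")
```

At one million records, a 99% accurate tool would be expected to produce roughly ten thousand wrong answers, each of which someone has to find and correct.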

Meaningful exposures already exist in the margins of massive datasets. For every 1,000 workstations, there may only be one exposed system; however, that system might be the single entry point an attacker needs to succeed. For situations that require knowing exactly what and where things are, systems that provide exact answers are, well, exactly what is needed.
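The one-in-a-thousand case can be worked through as a base-rate calculation. This sketch assumes that "99% accurate" means the tool correctly flags 99% of exposed systems and correctly clears 99% of unexposed ones. That is one interpretation, chosen for illustration, and `triage_outcome` is a hypothetical helper.

```python
def triage_outcome(total: int, exposed: int,
                   sensitivity: float, specificity: float) -> dict:
    """Expected alert counts when a rare exposure hides in a large population."""
    true_alerts = exposed * sensitivity                      # real exposures flagged
    false_alerts = (total - exposed) * (1.0 - specificity)   # healthy systems flagged
    flagged = true_alerts + false_alerts
    return {
        "expected_true_alerts": true_alerts,
        "expected_false_alerts": false_alerts,
        # Share of alerts that point at a real exposure.
        "precision": true_alerts / flagged if flagged else 0.0,
    }


# 1,000 workstations, one of them exposed (the ratio used in the text above).
result = triage_outcome(total=1_000, exposed=1, sensitivity=0.99, specificity=0.99)
print(result)
```

Under these assumptions, about ten false alerts are expected for each real one, so only around 9% of flagged systems are actually exposed, and there is still a small chance that the single exposed system is missed entirely.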

Lies, damn lies, and statistics

Statistical methods are beautiful applications of mathematics based on centuries of meticulous work, but the outcomes of these methods tend to be aggregate views and trends over time. Statistical models, and AI tools built on these models, are great at providing high-level views, but unfortunately tend to bury the most critical exposures instead of flagging them for remediation efforts.

A great example of this is the average asset risk metric: does a single high-risk asset actually present the same risk as 10 low-risk assets? In almost all cases, the answer is no. There are times when we want to generalize from the details, and statistical methods are indispensable for reporting, describing overall distributions, and locating outliers. However, when we want to see exactly what assets exist, where they are, and what they do, statistical methods are less useful.
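A small sketch shows how an average can hide the one asset that matters. The risk scores, the 0 to 100 scale, and the threshold of 80 are all made up for illustration and do not reflect any particular product's scoring.

```python
from statistics import mean

# Hypothetical risk scores on an assumed 0-100 scale.
fleet_a = [91] + [1] * 9   # one high-risk asset among nine low-risk assets
fleet_b = [10] * 10        # ten uniformly low-risk assets

HIGH_RISK_THRESHOLD = 80   # assumed cutoff for "needs attention now"


def summarize(scores: list[int]) -> dict:
    """Compare the aggregate view (mean) with the exact view (per-asset)."""
    return {
        "mean": mean(scores),
        "max": max(scores),
        "high_risk_indexes": [i for i, s in enumerate(scores)
                              if s >= HIGH_RISK_THRESHOLD],
    }


summary_a = summarize(fleet_a)
summary_b = summarize(fleet_b)
print(summary_a)
print(summary_b)
```

Both fleets report the same average score, yet only the per-asset view reveals that the first fleet contains a system above the threshold.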

Precision matters

The goal of CAASM is to provide comprehensive and precise visibility into the entire organization, with a focus on minimizing exposure. The current generation of AI tools struggles to help due to the outsized effort required to verify their results. Defenders already struggle with a deluge of noise from their tools, and adding more wrong answers has a real human cost.

Statistical models, while helpful for measuring trends over time, also tend to obfuscate the most critical exposures in noise. CAASM requires precision at scale, and failing to identify even one percent of an attack surface or an organization's assets is not an acceptable error rate. AI tools may be helpful for report generation and data summarization, but struggle to provide the level of accuracy required to deliver on the promise of CAASM.